Who’s Fact-Checking AI?

The changing nature of governance in the AI age and why vector databases can help create a “time machine” to better manage its output.

Does AI need a staff? An AI fact-checker, an editor, an ethics advisor? The answer is yes, and tag, you’re it. Human oversight is the key to governing AI. But unless you’re Michael J. Fox and have a friend with a DeLorean time machine, you’ll need some tools to help capture, analyze, and trace the recommendations and actions taken by AI models.

Vector databases are an essential new technology that helps track predictions made by generative AI for traceability and governance. And thanks to their built-in ability to manage time, they’re a “time machine” for governing the rising use of AI.

Here’s why we need a dynamic, reliable database to understand AI’s output—and how to leverage it.

Trust AI, but Verify Generative AI’s Predictions

Ironically, if you ask an AI chatbot who said “trust, but verify,” it gives a single answer: former President Ronald Reagan. But that’s only partially correct. The phrase comes from a rhyming Russian proverb, “Doveryay, no proveryay,” which Suzanne Massie, a scholar of Russian history, taught to Reagan. He is not the originator, only the English popularizer.

This example illustrates the limitations of AI. Generative AI tools, such as Bing and Jasper for text or Midjourney for images, predict what you’ll like. Whether you trust their output is up to you. Or, if you’re a programmer, AI can generate code based on your descriptions; you judge, debug, and turn it into something useful.

But what if something goes wrong? What if AI makes an unintentionally biased prediction, and you use it? What if a customer accuses you of giving them the wrong information?

Many people ask, “Is AI reliable?” But it’s important to remember that AI makes predictions, not decisions.

In Power and Prediction: The Disruptive Economics of Artificial Intelligence, authors Ajay Agrawal, Joshua Gans, and Avi Goldfarb suggest we consider two sides of the decision-making equation: predictions and judgments. Predictions are statistically derived guesses, and AI is great at making these. Judgments — what to do about those guesses — are made by humans.

It’s up to humans to govern the entire process and ensure decisions are made accurately, safely, and without bias. All inputs, recommendations, and decisions must be stored to ensure well-managed AI-infused decision-making.

Vector Databases To Better Govern AI Predictions

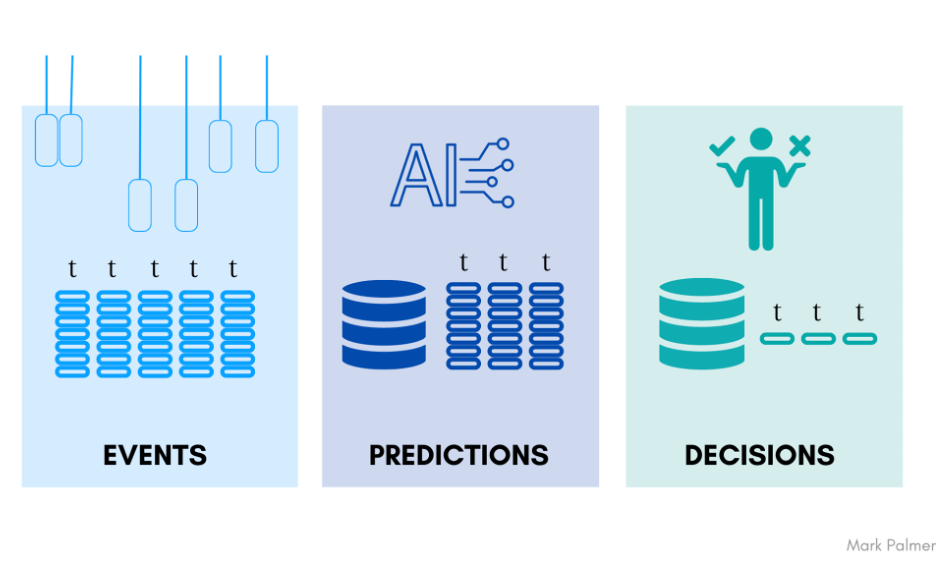

By storing input events, predictions made by AI, and human decisions, all organized by time, we get the essential information we need to govern and manage generative AI systems properly.

Vector databases are designed to organize data along dimensions such as time. That lets you search data the way you browse recordings on your TV at home: rewind the tape, pause the action, and step through it, event by event, to replay the data exactly as it happened. But unlike your DVR at home or backups from your IT department, time-series vector databases do this automatically by using timestamps as a first-class element of their storage mechanism.
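To make the "rewind and replay" idea concrete, here is a minimal sketch in plain Python. It is not the API of any particular vector database; the `AuditEvent` and `AuditLog` names, the `kind` labels, and the sample call center events are all hypothetical, chosen only to illustrate keeping inputs, predictions, and decisions ordered by timestamp so a time window can be replayed.

```python
from bisect import bisect_left, bisect_right
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    """One timestamped entry (hypothetical schema for illustration)."""
    timestamp: datetime
    kind: str      # "input", "prediction", or "decision"
    payload: str


class AuditLog:
    """Keeps events sorted by timestamp so any window can be replayed."""

    def __init__(self):
        self._events = []

    def record(self, event):
        # Insert in timestamp order, even if events arrive out of order.
        keys = [e.timestamp for e in self._events]
        self._events.insert(bisect_right(keys, event.timestamp), event)

    def replay(self, start, end):
        # Return every event with start <= timestamp <= end, oldest first.
        keys = [e.timestamp for e in self._events]
        return self._events[bisect_left(keys, start):bisect_right(keys, end)]


def ts(minute):
    """Helper: a UTC timestamp at 09:<minute> on an arbitrary sample day."""
    return datetime(2024, 1, 1, 9, minute, tzinfo=timezone.utc)


log = AuditLog()
log.record(AuditEvent(ts(2), "decision", "agent waived the late fee"))
log.record(AuditEvent(ts(0), "input", "customer asks about a late fee"))
log.record(AuditEvent(ts(1), "prediction", "model suggests waiving the fee"))

# Step through the first two minutes of the interaction, event by event.
for event in log.replay(ts(0), ts(1)):
    print(event.timestamp.isoformat(), event.kind, event.payload)
```

A production system would delegate the ordering and range queries to the database itself; the sketch only shows why treating the timestamp as the primary key makes replay a simple range lookup.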

A data time machine built on a vector database helps end users trace how an AI system behaved and build trust in it. When used with large language model (LLM) recommendations, data can be integrated, walled off from public data sources, and governed according to internal rules.

By decoupling data inputs, generative AI output, and human decision-making, a vector database can help data scientists work more closely with data engineers and frontline staff to continually improve the interaction between humans and machines.

A Case Study: Generative AI for Call Center Conversational Intelligence

Imagine you contact a call center with a question about your mobile phone account. A large organization like a cable company, telecommunications carrier, or bank receives tens of thousands or even millions of calls daily, each with hundreds of “utterances” that make up a conversation.

Call center conversational intelligence is one of enterprise AI's most exciting use cases today. Conventionally, companies can only afford to sample customer conversations. They choose random calls, replay the tape, relisten, and analyze interactions to improve service.

According to generative AI company Talkmap, in one large bank, a staff of 200 call analysts can review just over 1% of the interactions their agents have every day.

Large language models and generative AI are the perfect tools for this task.

By automating speech-to-text transcription and predicting what customers are asking and what agents are saying, generative AI can analyze 100% of the conversations a typical call center holds and surface insights that improve the customer experience and agent actions. In much the same way you ask Bing to summarize an article, AI can privately and instantly summarize your last five calls for your agent. By highlighting your recent questions and concerns, it lets your rep respond with context and confidence.

AI is already hard at work in the background of our lives, analyzing call center conversations, responding to our voice commands, recommending our music and movies, trading our stocks, tweaking our manufacturing processes, and alerting our credit card providers that we’re probably not in Mexico City buying a car when we’ve never been there before.

Humans have carefully trained the models behind these tasks, recommendations, and alerts. But when something goes wrong, you need a time machine to find out what happened and set it right.

Trust AI, But Verify It

Trust is built on verification. Private LLMs and generative AI are rapidly being deployed in leading companies, which means any organization can build a system to apply AI on the front lines. Recording and digging into the origins of input data, recommendations, and decisions can be painstaking drudgery, but it doesn’t have to be. Vector databases help make recording data transparent to applications so you can find its references and data points instantly.
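As a rough illustration of digging into the origins of a decision, the sketch below links each stored record to the record that caused it and walks the chain backward. Everything here is an assumption made for the example: the `Record` schema, the `caused_by` field, the record IDs, and the sample data are hypothetical and do not come from any specific product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Record:
    """A stored input, prediction, or decision (hypothetical schema)."""
    record_id: str
    kind: str                        # "input", "prediction", or "decision"
    detail: str
    caused_by: Optional[str] = None  # ID of the record this one was based on


def trace(records, record_id):
    """Walk from a record back to its origin, most recent first."""
    chain = []
    seen = set()
    current = records.get(record_id)
    while current is not None and current.record_id not in seen:
        seen.add(current.record_id)  # guard against accidental cycles
        chain.append(current)
        current = records.get(current.caused_by) if current.caused_by else None
    return chain


records = {
    r.record_id: r
    for r in [
        Record("i1", "input", "customer disputes a roaming charge"),
        Record("p1", "prediction", "model recommends a refund", caused_by="i1"),
        Record("d1", "decision", "agent issues the refund", caused_by="p1"),
    ]
}

# Starting from the human decision, recover the prediction and input behind it.
for record in trace(records, "d1"):
    print(record.kind, "->", record.detail)
```

If a customer later questions the refund, the chain shows which prediction the agent acted on and which input produced that prediction.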

An AI time machine based on vector databases helps humans determine that the output of generative AI is accurate, safe, private, unbiased, and empathetic. It’s the key to not only trusting AI but verifying it as well.